When implementing a CRM system for an established Enterprise organisation, there are many systems to gather data from, to be able to provide a comprehensive customer view to the end-users of the software.

The 360 degrees customer view is normally obtained by combining real-time integrations with external systems (such as accounting software, ERP, recommendation engines etc.) with an extensive process of data migration including data mapping, data scrubbing, manipulation and import from existing systems that will eventually be decommissioned.

Today I will focus specifically on the subject of performing a sizeable initial data load from a system to Sugar’s CRM system.

Data migrations

When approaching complex and large data migrations, usually the organisation’s database administrators get involved as well. With DBAs point of view being mostly data centric, the preferred approach is normally to understand Sugar’s DB schema, slice and dice the data, and finally migrate data from database to database.

The challenge with this approach is that Sugar is not just a data placeholder, but a complex web based application where the application layer can have run-time interactions with the data as well (eg: by creating related entities wherever required, by communicating data changes to downstream systems like Elasticsearch etc.). Based on my experience, I found that direct database queries normally add too much complexity and unnecessary overhead to the project, by trying to understand in full and possibly re-build the application layer, to complete the missing actions after the actual data import.

Custom bulk import upsert API

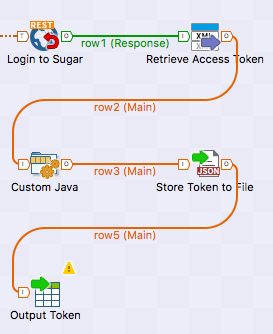

The next logical step is to look at the application’s provided RESTful API. When the amount of data starts to stretch import times with just the CRUD Sugar API, it is possible to enhance the rich Sugar framework with additional custom RESTful APIs that can help us with the import process’ timing.

Having implemented numerous initial data loads as well as bulk imports, the most common approaches to enhance the import data speed are the followings:

- Reduce the number of API calls required to achieve the final outcome

- Increase the number of records that can be processed at any one time with a single API call

- Decrease lookup time when dealing with existing records

- Decrease save time by removing or queueing in the background non-essential actions during import

- Execute data imports in parallel wherever possible, for independent entities

With the above points in mind, I went ahead and built a bulk upsert (update or insert based on the lookup unique key) tool for the SugarCRM Community to help with the import process and shared it on the SugarCRM Labs BulkImport git repository.

The API accepts multiple records per request and it is optimised for performance. Is it possible to configure:

- Modules available for import by the Admin users via the bulk upsert API

- SQL lookup statement to execute for each record, based on a known external unique identifier, to proceed either with an update or an insert

- External unique identifier location within Sugar

- Custom logic definition either before and/or after processing of each record

The example can cover most use cases either with Sugar being the master of the imported data going forward, or with an external system still being the data master.

To achieve the best throughput I recommend to disable all non business critical operations during initial data loads (eg: if not required on initial load disable all workflows, logic hooks and other synchronous actions).

Benefits

The clear benefits brought by the custom API for extensive data import and initial data loads are summarised below:

- Flexibility, by leveraging in full the underlying framework

- Multiple records can be inserted with one REST call

- Performance is greatly improved by implementing various strategies (eg: upserts, multiple operations in one call, multiple records per call, completing lookup with sql queries only, disable activity streams for imported records etc.)

- The application layer’s logic is triggered automatically (eg: audit logs, logic hooks, workflows)

- Related and downstream systems are automatically made aware of data changing (eg: Elasticsearch)

- Accessible only to Admin users

- It provides a great starting point to build upon. It will work for most scenarios as-is with minor configurations

Additional thoughts

To conclude the post, I wanted to list few additional thoughts about what else needs to be taken care of, when completing complex data migration and imports:

- Start building the ETL import processes really early, as it is really crucial to get it right to not miss go-live deadlines. It might even help your architects find the best data structure for the new system by proceeding with agile iterations. Basically treat the data load as a separate functional stream that evolves while additional functionality is delivered during the project’s sprints

- When building the ETL process, make sure all entities are imported in the right order (start from the entities with no data dependencies and when dealing with entities with dependencies, make sure they are all met)

- The ETL process should be optimised to insert as many entities in parallel as possible, as long as they are unrelated to each other

- Define with the business if there are portions of data (as in full entities, or even just a subset of records) that can be imported after go-live, so that those entities can trickle slowly after go-live, decreasing the required downtime

- The overall process should be initially tested with a small working data set that will need to be produced for the specific purpose

- The process should be tested, tweaked and optimised multiple times, until the full data load duration is acceptable for both IT and the business

- During data load tests, make sure the system’s infrastructure is sized correctly to sustain both the user’s load and the data import load as well

- Take care of disabling all unnecessary logic that should not be triggered during data import such as new workflows, customer welcome emails, record’s assignment emails etc.

- Make sure the DBAs are involved to actively find potential database bottlenecks and optimise your lookup queries

- Successful data load processes require a reliable way to compare data input vs data output to make sure that only actual incomplete (as in broken) data is not imported, and all the remaining records match. This can easily be a database to database comparison tool that can flag differences

All the best with your next data migration

Enrico,

This article comes to hearty as I work with are migrations, ETL, and data integrations. I work with Apache Camel for the routing and mediation.

I have some burning questions that relate to the article.

1) Given multithreaded capabilities what have you found the fastest

a) parallel /POST, /PUT, /GET, etc operations

b) parallel /bulk operations. I find that the parallel bulk api fluctuates for some reason in performance.

2) With your bulkupserting does it have the capability to turn of activity stream and tracker while bulk upserting?

This question kind of unrelated, but I would go crazy if you did a best practice on ETL and relating records. I am curious how you guys approach that. Whether its best to first upsert all the module records and then approach what records need to be related after all the module datasets have been sync’d.

Hi Michael,

Thanks for reaching out on my blog!

It really does depend on what you are trying to do and the specific scenario. You can execute parallel API calls with Sugar, for all the methodologies you mentioned above.

When I have built the BulkImport API, it was not only to have maximum speed of operation, but also to facilitate the overall tasks of initial data load and ongoing data imports, while still performing all the framework specific actions.

If I had to select the top three features of the API, from my point of view those are:

Yes, the BulkImport API has indeed activity stream record creation disabled by default. As tracker is disabled by default on Sugar, on my projects I tend to leave it disabled or recommend to do so, as it can be resource intensive. Based on your feedback I’ve just made sure it is disabled when leveraging the BulkImport API, if you do have it enabled (see: https://github.com/sugarcrmlabs/BulkImport/pull/14/files).

In regards to relating records, with the BulkImport API you have as much flexibility as you need. You would have to find an approach that works best for you, to guarantee the shortest path to get the full dataset into the system.

If the customer has the possibility of waiting, you could process all the inserts first, and then start processing all the relationships.

Alternatively you would build the parallel steps to get the data required into the system, then complete some serial steps, and then some more parallel steps as you see fit.

As an example you could start by importing Users (and setup Teams/Roles etc) by themselves first. Then start importing Accounts and Contacts in parallel (and maybe Products and all other modules that might come useful later, that are not related to Accounts or Contacts), and assign them to the correct Users with custom BulkImport api hooks. Once those objects are imported, you can start relating those objects between themselves, while in parallel inserting other main objects such as Opportunities. Then once they are completed, you can start another run of relationships and inserts in parallel, until you are done with all objects and relationships.

You really have the possibility of mixing and matching parallel/serial, as long as you do not miss dependencies along the way, or do all objects first, and then all the relationships.

Hope this helps you further in your data loading process

Good luck!

Enrico,

That was well put. Based on the scenario of a heavy initial load, I would populate the modules first in parallel possibly, and then go back and related the entities.

I want to start using your BulkApi and assist with PR’s. What I have done a little bit differently is establish a cache of external_keys and update the cache on the inserts for new records.

I also thought it would be nice to relate Contacts to Account by sending an array of external_keys for Contacts (for example) and the external_key for the Account.

Thanks for answering.

Was wondering, any reason why you haven’t also suggested an ETL tool instead, something like Talend Open Studio or others.

Curious about your reasons

In the meantime, thanks for all your contributions over the years.

Hi Vincent,

Thank you for your kind words and no problem!

I did indeed mention ETLs as tools to initiate the data move, but I recommended the API approach as receiving end of the integration/data load process.

All the best